DRAFT: Solar Energy Statistics e-ink display

For Christmas last year, I wanted to make a present for my Mum. My parents had recently had solar panels and a battery installed.

As I understood it, the system was set up so that the solar power would:

- primarily power the house; then

- charge the battery; and, once the battery was full,

- heat water for the hot water tank
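As a rough illustration of that priority order, here is a toy model of where a given amount of solar generation would go. It is a simplification under stated assumptions, not how the installed system actually works: battery charge-rate limits are ignored, hot_water_max_w is a hypothetical limit on how much the water heater can absorb, and anything left over is assumed to be exported to the grid.

```python
def allocate_solar(generation_w: float, house_load_w: float,
                   battery_full: bool, hot_water_max_w: float) -> dict:
    """Toy model: split solar generation by priority (house, battery, hot water)."""
    # 1. The house gets first call on the solar power
    to_house = min(generation_w, house_load_w)
    surplus = generation_w - to_house

    # 2. Then the battery, until it is full (charge-rate limits ignored)
    to_battery = 0.0 if battery_full else surplus
    surplus -= to_battery

    # 3. Then the hot water tank, up to the (hypothetical) heater limit
    to_hot_water = min(surplus, hot_water_max_w)

    # Assumption: whatever is still left over is exported to the grid
    to_grid = surplus - to_hot_water
    return {"house": to_house, "battery": to_battery,
            "hot_water": to_hot_water, "grid": to_grid}
```

For example, with 3kW of generation, a 1kW house load and a full battery, a 1.5kW heater would take 1.5kW and the remaining 0.5kW would go to the grid.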

She wanted to be more energy efficient, but knowing when to use the dishwasher and when to have a shower could be complex. On top of this, the suppliers provided phone apps (and APIs… more on that later) for monitoring the system, but each one covered only part of it: one supplier provided information on the solar panels, another monitored the battery, and another provided information on the energy being consumed and the energy supplied back to the grid. Working out what was actually going on therefore meant using a handful of applications, all of which displayed the information differently.

I'd seen inspiration online from people putting e-ink displays behind mirrors, in frames and in various other places around the house, where they looked elegant, especially compared with “smart energy meters”: plastic devices that brightly show you how much you’re damaging the earth and denting your wallet with every second that passes.

Designing

To get a rough idea of cost and feasibility, I took a look at e-ink displays. Waveshare has a lot of options available, and its 7.5" display that supports black, white and red seemed ideal: about the size of a photo frame and only ~£70.

I had a couple of Raspberry Pis lying around, so I ordered the display and waited for it to arrive. Once it did, I gave it a test and was able to run some demo scripts. But I didn't feel great about sticking an ancient Raspberry Pi into a present, and buying a new one would, at the time, have cost about as much as the display. I did, however, spot the Raspberry Pi Pico W: a much smaller alternative, with Wi-Fi(!), for only £4. Such an incredible steal that I quickly bought five of them!

Wasting API calls

I had also been looking at the APIs, their rate limits and other details, and found that, to get the information I wanted, I would use up most of each account's API request allowance, all for a point-in-time display of the statistics.

So I decided to pivot to a server-client model - I’d write an app that would run on a server, which would have two components:

- Stats gathering - a cron-esque application that would periodically gather data from the various providers' APIs

- Web endpoint - this would provide an endpoint from which the display could fetch what it needed

The data store between these would be InfluxDB, which would also allow me to deploy Grafana and create some dashboards for my parents to view historical data, making all of those API requests worthwhile in the end.

Memory limitations

At this point, my plan was for the Raspberry Pi to fetch the current data from one place and generate the display itself.

The built-in libraries (provided by Waveshare) had the ability to print text and draw basic shapes. Whilst putting together some designs, I quickly realised that I wanted to avoid just a textual display - I wanted diagrams and graphics as well.

I started looking at alternative means of drawing images, such as

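However, with a resolution of 800x480, the numbers add up quickly: even at 1-bit colour depth, each colour plane is 48,000 bytes, and an uncompressed image at one byte per pixel would be 375KB. Ignoring any other code (to drive the display and interact with the APIs), an image that size would have used 72% of the memory on its own!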

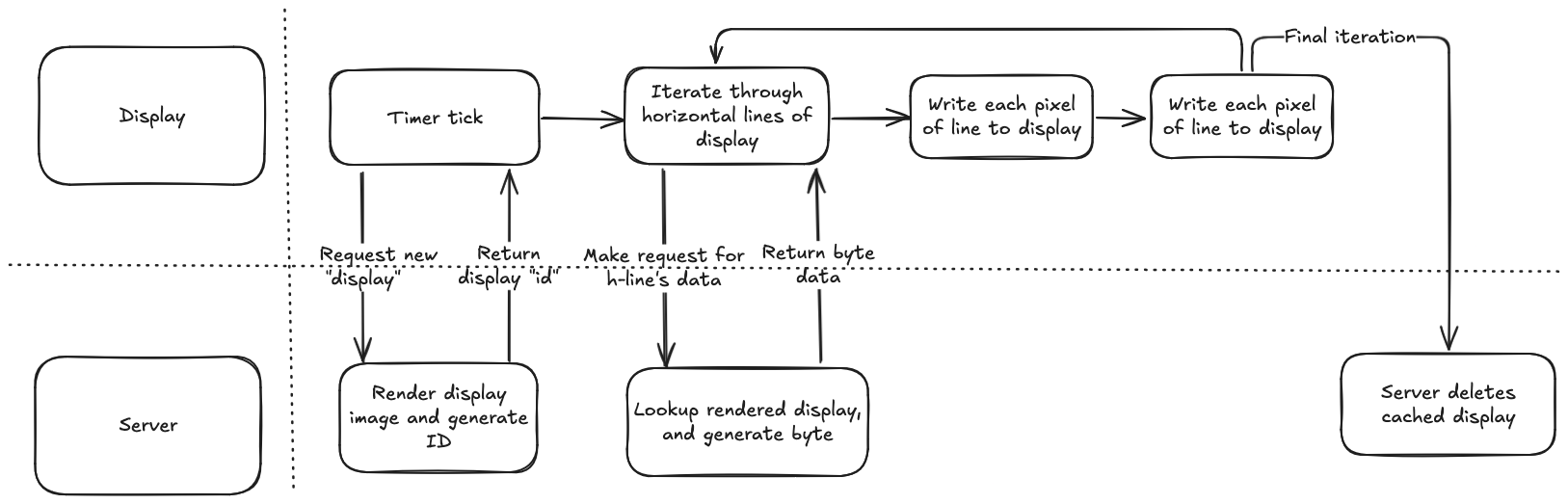
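For reference, the raw sizes for an 800x480 panel are plain arithmetic. This sketch works them out for a single 1-bit plane, the separate black and red planes together, an image stored at one byte per pixel, and one line at 2 bits per pixel (the transfer format used later on):

```python
WIDTH, HEIGHT = 800, 480

pixels = WIDTH * HEIGHT                 # 384,000 pixels
one_bit_plane = pixels // 8             # bytes for one 1-bit colour plane
black_and_red = 2 * one_bit_plane       # separate black and red planes
one_byte_per_pixel_kib = pixels / 1024  # 8-bit image, in KiB
line_bytes = WIDTH * 2 // 8             # one line at 2 bits per pixel

print(one_bit_plane, black_and_red, one_byte_per_pixel_kib, line_bytes)
# 48000 96000 375.0 200
```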

Seeing that the e-ink library provided EPD_7in5_B.imageblack.pixel() and EPD_7in5_B.imagered.pixel() to set individual pixel colours, I changed the plan: render the display on the server side and return the data to the display line by line.

The new process would look something like this:

Gathering Data

I'm not going to go into much detail about getting the data from individual providers, because it's very provider-specific and probably not of much use. I will mention, though, that when I reached this point I had no idea which product I actually wanted to use for storing the data, so I started by creating a base class for a data store and writing a mock implementation that performed the required actions:

```python
import datetime
from typing import Any, List, Optional


def print_debug(*args) -> None:
    """Minimal stand-in for the debug-print helper used below"""
    print(*args)


class DataPoint:
    """Data point to insert into the data store"""

    def __init__(self, key: str, value: float, timestamp: Optional[datetime.datetime] = None):
        # Default to the current time if no timestamp is given
        self._timestamp = datetime.datetime.now() if timestamp is None else timestamp
        self._key = key
        self._value = value

    @property
    def key(self) -> str:
        """Return key"""
        return self._key

    @property
    def value(self) -> float:
        """Return value"""
        return self._value

    @property
    def timestamp(self) -> datetime.datetime:
        """Return timestamp"""
        return self._timestamp


class DataStore:
    """Base class for data stores; subclasses implement the actual storage"""

    def __init__(self):
        """Connect to data store"""
        self._connection = self.connect()

    def connect(self) -> Any:
        """Connect to data store, returning the connection (if any)"""
        raise NotImplementedError

    def store_values(self, data_points: List[DataPoint]) -> None:
        """Store data points in the data store"""
        raise NotImplementedError

    def get_latest_value(self, name: str) -> float:
        """Get latest value from the data store"""
        raise NotImplementedError


class DebugPrintDataStore(DataStore):
    """Print out values instead of storing them"""

    def connect(self) -> None:
        """No connection needed"""
        return None

    def store_values(self, data_points: List[DataPoint]) -> None:
        """Print values instead of storing them"""
        for data_point in data_points:
            print_debug("Storing datapoint: ", data_point.timestamp, ":", data_point.key, ":", data_point.value)

    def get_latest_value(self, name: str) -> float:
        """Return a fixed dummy value"""
        return 1.232
```

This was really useful while I was working with the APIs, and it was a nice abstraction that allowed the real data store (which turned out to be InfluxDB) to simply be dropped into place later.
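For a sense of what an InfluxDB-backed store ends up writing, here is a minimal sketch that formats one value as an InfluxDB line-protocol record (measurement, then field=value, then a timestamp). The function and measurement names are hypothetical, and in practice the official InfluxDB client library can build these records for you.

```python
import datetime

EPOCH = datetime.datetime(1970, 1, 1, tzinfo=datetime.timezone.utc)


def to_line_protocol(measurement: str, key: str, value: float,
                     timestamp: datetime.datetime) -> str:
    """Format one data point as an InfluxDB line-protocol record.

    Assumes measurement and key contain no spaces, commas or '=' signs
    (so no escaping is needed) and that timestamp is timezone-aware.
    """
    # Line protocol timestamps default to nanosecond precision; integer
    # arithmetic on the timedelta avoids float rounding errors.
    delta = timestamp - EPOCH
    nanoseconds = ((delta.days * 86_400 + delta.seconds) * 1_000_000
                   + delta.microseconds) * 1_000
    # Always write a float field (integer fields would need an 'i' suffix)
    return f"{measurement} {key}={float(value)!r} {nanoseconds}"
```

Note that DataPoint defaults to a naive datetime.datetime.now(), so its timestamps would need a timezone attached (for example datetime.datetime.now(datetime.timezone.utc)) before going through a function like this.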

A script dedicated to gathering the data then used the Python schedule library to set up the intervals at which the data was gathered.

Generating Displays

I opted for Pillow (PIL) for generating the displays. It has good support for complex image manipulation and it's something I'd used before, so it seemed like a good choice (especially given the looming deadline).

I created a basic FrameBuffer class, which held the Pillow image and provided a text() method for drawing text in a standardised way.

To transfer the data to the Raspberry Pi, I packed each line into a byte array using 2 bits per pixel to indicate white, black or red, converting the pixel values taken from PIL:

```python
def to_byte_array(self, line_number):
    """Convert one line of the image to a packed byte array"""
    image_data = self.image_data[(line_number * self.WIDTH):((line_number + 1) * self.WIDTH)]

    # Convert each pixel to 2 bits:
    # - 00 - white
    # - 01 - black
    # - 10 - red
    # storing 4 pixels per byte (first pixel in the lowest two bits)
    output_data = bytearray()
    current_byte = 0
    current_pixel = 0
    for itx, pixel_value in enumerate(image_data):
        if pixel_value == self.RED:
            current_byte |= (1 << ((current_pixel * 2) + 1))
        elif pixel_value == self.WHITE:
            # Do not set any bits for white
            pass
        else:
            # Treat all other colours as black, in case the Pillow library
            # has caused any merging of colours etc.
            current_byte |= (1 << (current_pixel * 2))

        # If this is the last pixel for the byte, push the byte to the output
        # and reset the current pixel/byte.
        # Also do this for the final pixel, so a partially filled byte is still pushed
        if current_pixel == 3 or itx == len(image_data) - 1:
            output_data.append(current_byte)
            current_byte = 0
            current_pixel = 0
        else:
            current_pixel += 1

    return bytes(output_data)
```
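Because the display fetches a screen one line at a time, every line request needs to come from the same rendered image, even if new data arrives mid-transfer. A minimal sketch of how the server side could handle that, assuming the two-step exchange used by the preview script below (/generate returning an ID, then one request per line): render once, cache the packed lines under an ID and serve them by index. The names here are hypothetical, and the functions would still need wiring up to whichever web framework serves the endpoints.

```python
import uuid
from typing import Callable, Dict, List

# Hypothetical in-memory cache of rendered screens: screen ID -> packed lines
RENDERED_SCREENS: Dict[str, List[bytes]] = {}


def generate(render_lines: Callable[[], List[bytes]]) -> str:
    """Render a screen once, cache its packed lines and return its ID"""
    screen_id = str(uuid.uuid4())
    RENDERED_SCREENS[screen_id] = render_lines()
    return screen_id


def screen_line(screen_id: str, line_number: int) -> bytes:
    """Return the packed bytes for one line of a previously generated screen"""
    return RENDERED_SCREENS[screen_id][line_number]
```

Here render_lines would be something like a function returning [framebuffer.to_byte_array(y) for y in range(480)]. Nothing in this sketch evicts old screens, so a real version would want to drop them once they've been fetched or keep only the latest.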

Since all of the logic now ran outside the Raspberry Pi, it was incredibly easy to mock up the Raspberry Pi's side of the process on my own machine to preview the work:

```python
import requests
from PIL import Image

SERVER = 'http://localhost:5000'
WIDTH, HEIGHT = 800, 480

image = Image.new("RGB", (WIDTH, HEIGHT), (0, 0, 255))

# Ask the server to render a new screen and return its ID
res = requests.get(f'{SERVER}/generate')
res.raise_for_status()
id_ = res.json().get('id')

for h in range(HEIGHT):
    # Fetch one line of packed pixel data (2 bits per pixel, 4 pixels per byte)
    res = requests.get(f"{SERVER}/screen/{id_}/{h}")
    res.raise_for_status()
    screen_data = res.content

    for w in range(WIDTH):
        bytes_index = w // 4        # which byte holds this pixel
        bit_offset = (w % 4) * 2    # position of the pixel's 2 bits in that byte
        pixel_data = (screen_data[bytes_index] >> bit_offset) & 0b11

        colour = (255, 255, 255)    # 00 - white
        if pixel_data == 1:
            colour = (0, 0, 0)      # 01 - black
        elif pixel_data == 2:
            colour = (255, 0, 0)    # 10 - red
        image.putpixel((w, h), colour)

image.show()
```
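On the device, the same decoding loop can write straight into the Waveshare driver's buffers instead of a Pillow image. This is a sketch rather than the code that ran on the Pico: draw_line is a hypothetical helper, and it assumes, as in Waveshare's demo code, that 0x00 draws black in imageblack, 0xff draws red in imagered, and that both buffers were cleared to white first (imageblack.fill(0xff) and imagered.fill(0x00)). Those values are worth checking against your driver version.

```python
def draw_line(epd, y, line_data, width=800):
    """Unpack one line of 2-bit pixels into the e-ink driver's buffers.

    Assumes both buffers were cleared to white beforehand, so only
    black and red pixels need setting.
    """
    for x in range(width):
        # 4 pixels per byte, first pixel in the lowest two bits
        value = (line_data[x // 4] >> ((x % 4) * 2)) & 0b11
        if value == 1:
            # 01 - black (assumed: 0x00 draws black in the black buffer)
            epd.imageblack.pixel(x, y, 0x00)
        elif value == 2:
            # 10 - red (assumed: 0xff draws red in the red buffer)
            epd.imagered.pixel(x, y, 0xff)
        # 00 - white (and the unused 11): nothing to set
```

In the fetch loop, each line's response body would be passed as line_data, with the panel refreshed once after all 480 lines have been drawn. Only one 200-byte line needs to be held at a time, on top of the driver's own buffers.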

Initial test

At this point, I was ready to test this in practice. Though I’d done some basic tests of Python code on the Raspberry Pi, this would be the first test of the Raspberry Pi pulling data from the server: –INSERT IMAGE–

Extending Displays

With the FrameBuffer and a BaseDisplay class in place, I was able to write simple displays quickly and easily. For example, a simple display that obtained the latest news article from an API and drew it to the screen looked like this:

```python
class NewsArticle(BaseDisplay):
    """News article display"""

    SHOW_IN_ROTATION = True

    # Cache of news articles, which will be cycled through
    # before obtaining new articles from the API
    NEWS_ARTICLES = []

    @classmethod
    def _get_article(cls):
        """Obtain an article, re-obtaining from the API if necessary"""
        if not cls.NEWS_ARTICLES:
            cls.NEWS_ARTICLES = WorldNewsApi().get_news_articles() or []
        if not cls.NEWS_ARTICLES:
            # Nothing returned from the API; draw() falls back to a message
            return None
        return cls.NEWS_ARTICLES.pop(0)['title']

    def draw(self):
        """Draw the latest news headline"""
        article = self._get_article() or "Could not obtain news article"
        self._framebuffer.text("Latest News", (320, 40))
        self._framebuffer.text(
            article,
            (40, 100),
            split_multiline=True,
            max_width=600)
```

Note the split_multiline and max_width arguments, which I’d implemented to cope with the display's relatively low resolution. As well as stopping text from running off the edge of the screen, they allow text to be boxed into a particular region of the display (so it doesn't overwrite other elements), and the text is split at the nearest word boundary.
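The word-boundary splitting can be a simple greedy loop. A minimal sketch, assuming a measure callable that returns the rendered width of a string in pixels (with Pillow, something like lambda s: draw.textlength(s, font=font) can serve that purpose); wrap_text is a hypothetical name, not the project's FrameBuffer method:

```python
from typing import Callable, List


def wrap_text(text: str, max_width: int, measure: Callable[[str], float]) -> List[str]:
    """Greedily split text into lines that fit within max_width pixels.

    Words are never broken, so a single word wider than max_width
    ends up on a line of its own.
    """
    lines: List[str] = []
    current = ""
    for word in text.split():
        candidate = f"{current} {word}" if current else word
        if measure(candidate) <= max_width:
            # The word still fits on the current line
            current = candidate
        else:
            # Start a new line with this word
            if current:
                lines.append(current)
            current = word
    if current:
        lines.append(current)
    return lines
```

Each returned line can then be drawn at the same x position, moving down by the font's line height each time.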

I was then able to start pulling in images. I gathered the images I wanted to display, converted them to 1-bit colour depth and, using PIL, could simply “paste” them onto the framebuffer:

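```python
from PIL import Image

# Load the (already 1-bit) image, scale it and paste it onto the framebuffer's Pillow image
image = Image.open(image_path)
image = image.resize((resize_x, resize_y))
self._framebuffer._image.paste(image, (x, y))
```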
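The SHOW_IN_ROTATION flag on NewsArticle implies that displays opt in to a rotation. One way that could work, sketched with a hypothetical minimal BaseDisplay (not the project's own class), is to collect the subclasses that set the flag and cycle through them:

```python
import itertools
from typing import Iterator, Type


class BaseDisplay:
    """Hypothetical minimal base class; real displays draw to a FrameBuffer"""

    # Subclasses opt in to the regular rotation by setting this to True
    SHOW_IN_ROTATION = False

    def draw(self) -> None:
        raise NotImplementedError


def rotation() -> Iterator[Type[BaseDisplay]]:
    """Cycle forever through the display classes that opted in to the rotation"""
    # __subclasses__() only returns direct subclasses of BaseDisplay
    displays = [cls for cls in BaseDisplay.__subclasses__() if cls.SHOW_IN_ROTATION]
    if not displays:
        raise ValueError("No displays are marked SHOW_IN_ROTATION")
    return itertools.cycle(displays)
```

Each time the screen is regenerated, the server would take next() from the rotation, instantiate that display and call its draw() method.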