Gitlab Ephemeral Environments for Pull Requests

Background

I maintain a handful of open source projects - most of which are of no interest to anyone.

There are one or two, however, that have a small handful of users - and also a small number of contributors.

Because of this, I spend a very inconsistent amount of time on each of these, occasionally fixing bugs and occasionally spending an hour or two each day for a week to get a feature done.

Since some of these projects do have users, those users have become a combination of things for me:

- Stakeholders

- Bug reporters

- Code reviewers

- Code contributors

Problem

I often implement a change and wonder what others think. It’s hard to ask someone I’ve never met, and only speak to via a one-line comment every 2-6 months, to spend valuable time on a code review just to get their input on a change.

So I wanted per-PR deployment environments: I can create a PR and they can simply view the branch on a running instance.

My requirements for this project were:

- Integration with self-hosted Gitlab

- Each PR would automatically create an environment without intervention

- Each PR would automatically (and reliably) destroy itself on PR close/merge

- Run on nomad

- Be isolated from any other infrastructure (much of the infrastructure already is)

- Avoid using the Gitlab docker registry (since I back this up, and ~1GB per PR would be incredibly expensive!)

- Not require any changes in:

  - External DNS (since this is bind and a manual change)

  - External load balancers (again a manual change in HAProxy)

- Provide a tidy solution - it should use Terraform to deploy - but the Terraform shouldn’t clutter the real repository.

- Any authentication should be created as dynamically as possible, avoiding lots of credentials hard coded in pipeline secrets.

Setting up nomad

Since I’ve started a long journey of migrating from a variety of other tools (such as kubernetes running via rancher, docker swarm+portainer etc.) to nomad, I already have a Terraform module for setting up nomad.

I start by creating a new virtual machine:

```hcl
module "zante" {
  source  = "terraform-registry.internal.domain/cosh-servers-zante__dockstudios/libvirt-virtual-machine/libvirt"
  version = ">= 1.1.0, < 2.0.0"

  name             = "zante"
  ip_address       = "10.2.1.13"
  ip_gateway       = "10.2.1.1"
  ip_prefix_length = 24
  nameservers      = local.dns_servers

  memory    = 3 * 1024
  disk_size = 20 * 1024

  base_image_path     = local.base_image_path
  lvm_volume_group    = local.volume_groups["ssd-raid-storage"]
  hypervisor_hostname = local.hypervisor_hostname
  hypervisor_username = local.hypervisor_username

  docker_ssh_key = local.ssh_key
  ssh_key        = local.ssh_key
  domain_name    = local.domain_name

  http_proxy  = local.http_proxy
  https_proxy = local.http_proxy
  no_proxy    = local.http_no_proxy
  NO_PROXY    = local.http_no_proxy

  # Connect to isolated network
  network_bridge = local.network_bridges["gitlab-pr-isolated-network"]

  # Install docker, which will be used for configuring nomad
  install_docker = true

  create_host_records = false

  # Create directories for docker data (though this probably won't be needed)
  # and nomad data directories
  create_directories = [
    "/docker-data",
    "/nomad",
    "/nomad/config",
    "/nomad/config/server-certs",
    "/nomad/data"
  ]
}
```

Once the machine is created, nomad and traefik can be set up and configured on it:

```hcl
module "server" {
  source  = "terraform-registry.internal.domain/gitlab-env-nomad__dockstudios/nomad/nomad//modules/server"
  version = ">= 1.1.0, < 2.0.0"

  hostname                  = "zante"
  domain_name               = "internal.domain"
  docker_username           = "docker-connect"
  nomad_version             = "1.6.3"
  consul_version            = "1.17.0"
  http_proxy                = var.http_proxy
  aws_endpoint              = var.aws_endpoint
  aws_profile               = var.aws_profile
  container_data_directory  = "/docker-data"
  primary_network_interface = "ens3"
}

module "traefik" {
  source  = "terraform-registry.internal.domain/gitlab-env-nomad__dockstudios/nomad/nomad//modules/traefik"
  version = ">= 1.0.0, < 2.0.0"

  cpu    = 64
  memory = 128

  # A wildcard SSL cert for *.gitlab-pr.internal.domain will be created
  # and a CNAME of *.gitlab-pr.internal.domain will point to the nomad server.
  # Traefik will be configured with a default service rule of Host(`{{ .Name }}.gitlab-pr.dockstudios.co.uk`)
  base_service_domain = "gitlab-pr.dockstudios.co.uk"

  nomad_fqdn    = "zante.internal.domain"
  domain_name   = "internal.domain"
  service_names = ["*.gitlab-pr"]
}
```

Application deployments

Now that we have a nomad instance running, we can look at how the application will be deployed.

The options were:

- Generic terraform that can deploy any application, with a lot of variables passed through in the Gitlab CI pipeline:

  - This will lead to lots of sensitive information being stored in CI and lots of pass-through variables in Gitlab CI.

  - Changes would require updating the common terraform, the Gitlab CI config in the OSS repo AND adding any values manually into Gitlab.

- Dedicated terraform for the project:

  - Although this can use a common module, this will add overhead when adding this deployment pipeline to new projects.

- Store the terraform in the repo:

  - This will likely cause confusion to anyone viewing the repo, since the Terraform will be quite specific to the CI pipeline.

I chose to go with a dedicated Terraform repo per application.

For the basis of this test, I’ll be creating this for Terrareg.

The basic Terraform for deploying a nomad service will look like:

```hcl
resource "nomad_job" "terrareg" {
  jobspec = <<EOHCL
job "terrareg" {
  datacenters = ["dc1"]
  type        = "service"

  group "web" {
    count = 1

    network {
      mode = "bridge"
      port "http" {
        to = 5000
      }
    }

    service {
      # We will need to update this
      name     = "terraform-registry"
      port     = "http"
      provider = "nomad"
      # Use tag to indicate to traefik to expose the service
      tags = ["traefik-service"]

      check {
        type     = "tcp"
        interval = "10s"
        timeout  = "1s"
      }
    }

    task "terrareg-web" {
      driver = "docker"

      config {
        # We will need to update this
        image = "some"
        ports = ["http"]
        # We won't mount volumes, as this is an ephemeral environment
      }

      env {
        ALLOW_MODULE_HOSTING         = "true"
        ALLOW_UNIDENTIFIED_DOWNLOADS = "true"
        AUTO_CREATE_MODULE_PROVIDER  = "true"
        AUTO_CREATE_NAMESPACE        = "true"
        PUBLIC_URL                   = "https://terrareg.example.com"
        ENABLE_ACCESS_CONTROLS       = "true"
        MIGRATE_DATABASE             = "True"
        # This does not need to be secret, as it's a public environment
        ADMIN_AUTHENTICATION_TOKEN = "GitlabPRTest"
        # We will use the default SQLite database
        # DATABASE_URL = ""
      }

      resources {
        cpu        = 32
        memory     = 128
        memory_max = 256
      }
    }

    restart {
      attempts = 10
      delay    = "30s"
    }
  }
}
EOHCL
}
```

To adapt this for automatically creating ephemeral environments, we’ll now need to consider how to handle:

- Terraform state

- Isolating deployments

- Docker images

Isolating deployments

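We will provide a variable to Terraform, which can be used to isolate the domain, the nomad job and the Docker image.

Gitlab provides the variable $CI_COMMIT_REF_NAME (the branch name), along with $CI_COMMIT_REF_SLUG, a lowercased, URL-safe version of it that is suitable for job names and hostnames. The slug is what gets passed in when running Terraform.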

We will also use the short commit hash to build a unique docker tag.
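GitLab documents `CI_COMMIT_REF_SLUG` as `CI_COMMIT_REF_NAME` lowercased, shortened to 63 bytes, with everything except `0-9` and `a-z` replaced with `-`, and with no leading or trailing `-`. The sketch below approximates that rule in POSIX shell (it is not GitLab's own implementation). It shows how one branch name maps onto the nomad job name, the hostname and the Docker tag. `ref_slug` and the sample branch/SHA values are hypothetical.

```shell
# Approximation of GitLab's documented CI_COMMIT_REF_SLUG rule (not GitLab's code):
# lowercase, replace anything outside [a-z0-9] with '-', keep the first
# 63 characters, then strip leading/trailing '-'.
ref_slug() {
  printf '%s' "$1" \
    | tr '[:upper:]' '[:lower:]' \
    | sed -e 's/[^a-z0-9]/-/g' \
    | cut -c1-63 \
    | sed -e 's/^-*//' -e 's/-*$//'
}

# Hypothetical values standing in for GitLab's predefined variables.
CI_COMMIT_REF_NAME="Feature/Add_PR-Environments"
CI_COMMIT_SHORT_SHA="1a2b3c4d"

slug=$(ref_slug "$CI_COMMIT_REF_NAME")
echo "nomad job:    terrareg-${slug}"
echo "hostname:     terrareg-${slug}.gitlab-pr.dockstudios.co.uk"
echo "docker image: terrareg:v${CI_COMMIT_SHORT_SHA}"
```

Using the slug rather than the raw branch name means characters such as `/` and `_` never reach the nomad job name or the DNS name.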

```hcl
# Backend required for Gitlab state
terraform {
  backend "http" {
  }
}

variable "pull_request" {
  description = "Name of pull request branch"
  type        = string
}

variable "docker_image" {
  description = "Docker image"
  type        = string
}

resource "nomad_job" "terrareg" {
  jobspec = <<EOHCL
job "terrareg-${var.pull_request}" {
  datacenters = ["dc1"]
  ...
    service {
      name = "terrareg-${var.pull_request}"
      port = "http"
      ...
        image = "${var.docker_image}"
      ...
        PUBLIC_URL = "https://terrareg-${var.pull_request}.gitlab-pr.dockstudios.co.uk"
```

The unique job name allows the Terraform to deploy independent jobs that do not conflict in nomad.

Docker image

To easily create docker images that will be used by nomad, without having to worry about a docker registry (and cleaning up the registry after use), we can configure the nomad server as a Gitlab runner.

The runner can run on nomad:

```hcl
resource "nomad_job" "gitlab-agent" {
  jobspec = <<EOHCL
job "gitlab-agent" {
  datacenters = ["dc1"]
  type        = "service"

  group "gitlab-agent" {
    count = 1

    volume "docker-sock-ro" {
      type      = "host"
      read_only = true
      source    = "docker-sock-ro"
    }

    ephemeral_disk {
      size = 105
    }

    task "agent" {
      driver = "docker"

      config {
        image = "gitlab/gitlab-runner:latest"
        volumes = [
          "/var/run/docker.sock:/var/run/docker.sock",
        ]
        entrypoint = ["/usr/bin/dumb-init", "local/start.sh"]
      }

      env {
        RUNNER_TAG_LIST = "nomad"
        REGISTER_LOCKED = "true"
        CI_SERVER_URL = "${data.vault_kv_secret_v2.gitlab.data["url"]}"
        REGISTER_NON_INTERACTIVE = "true"
        REGISTER_LEAVE_RUNNER = "false"
        # Obtain registration token from vault secret
        REGISTRATION_TOKEN = "${data.vault_kv_secret_v2.gitlab.data["registration_token"]}"
        RUNNER_EXECUTOR = "docker"
        DOCKER_TLS_VERIFY = "false"
        DOCKER_IMAGE = "ubuntu:latest"
        DOCKER_PRIVILEGED = "false"
        DOCKER_DISABLE_ENTRYPOINT_OVERWRITE = "false"
        DOCKER_OOM_KILL_DISABLE = "false"
        DOCKER_VOLUMES = "/var/run/docker.sock:/var/run/docker.sock"
        DOCKER_PULL_POLICY = "if-not-present"
        DOCKER_SHM_SIZE = "0"
        http_proxy = "${local.http_proxy}"
        https_proxy = "${local.http_proxy}"
        HTTP_PROXY = "${local.http_proxy}"
        HTTPS_PROXY = "${local.http_proxy}"
        NO_PROXY = "${local.no_proxy}"
        no_proxy = "${local.no_proxy}"
        RUNNER_PRE_GET_SOURCES_SCRIPT = "git config --global http.proxy $HTTP_PROXY; git config --global https.proxy $HTTPS_PROXY"
        CACHE_MAXIMUM_UPLOADED_ARCHIVE_SIZE = "0"
      }

      # Custom entrypoint script to register and run agent
      template {
        data = <<EOF
#!/bin/bash
set -e
set -x

# Register runner
/entrypoint register

# Start runner
/entrypoint run --user=gitlab-runner --working-directory=/home/gitlab-runner
EOF
        destination = "local/start.sh"
        perms       = "555"
        change_mode = "noop"
      }

      resources {
        cpu    = 32
        memory = 256
      }
    }
  }
}
EOHCL
}
```

Some things to note about this:

A custom entrypoint needed to be created to allow the container to register and then run as the agent.

The runner is configured with docker pull policy “if-not-present”. This allows us to build images locally and re-use them, without Gitlab checking a registry to verify that it’s up-to-date.

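Vault was used for storing the registration token and Gitlab URL, which Terraform reads (via the `vault_kv_secret_v2` data source) and renders into the job specification. Nomad, in this instance, is not integrated with vault - this completely isolates the PR environment from pre-existing vault clusters.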

Gitlab pipelines

Gitlab has its own Terraform state management, which we can use for storing state between the jobs that deploy and destroy the nomad job.

There are components available for Gitlab for OpenTofu (https://gitlab.com/components/opentofu) (the Terraform version is being deprecated due to the license change). However, since the instance of Gitlab that I’m using does not support components, I will need to hand-roll the pipelines and will re-use their deployment script.

In the deployment Terraform, I added the gitlab-terraform script (https://gitlab.com/gitlab-org/terraform-images/-/blob/master/src/bin/gitlab-terraform.sh), though they do also have a gitlab-opentofu alternative (https://gitlab.com/components/opentofu/-/blob/main/src/gitlab-tofu.sh).
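The `TF_STATE_NAME` variable is enough to isolate state per branch because GitLab's managed state is addressed as `<CI_API_V4_URL>/projects/<CI_PROJECT_ID>/terraform/state/<name>`, with a `/lock` suffix for locking. The sketch below builds those addresses as the Terraform `http` backend's `TF_HTTP_*` environment variables. This is similar to what the gitlab-terraform script configures, but it is a simplified illustration rather than the script itself. `state_address`, the project ID and the other sample values are hypothetical. Assuming the script's defaults, `CI_PROJECT_ID` is the open source project's ID, because the job runs in that project's pipeline. The state is therefore stored against that project, even though the Terraform is cloned from a separate repository.

```shell
# Build the GitLab-managed Terraform state URL for a given state name.
# Simplified sketch: credentials (TF_HTTP_USERNAME/TF_HTTP_PASSWORD) are omitted.
state_address() {
  # $1: state name, e.g. the branch slug used for TF_STATE_NAME
  printf '%s/projects/%s/terraform/state/%s' "$CI_API_V4_URL" "$CI_PROJECT_ID" "$1"
}

# Hypothetical values standing in for GitLab's predefined CI variables.
CI_API_V4_URL="https://gitlab.dockstudios.co.uk/api/v4"
CI_PROJECT_ID="42"
TF_STATE_NAME="feature-add-pr-environments"

# Environment variables read by Terraform's http backend.
TF_HTTP_ADDRESS=$(state_address "$TF_STATE_NAME")
TF_HTTP_LOCK_ADDRESS="${TF_HTTP_ADDRESS}/lock"
TF_HTTP_UNLOCK_ADDRESS="${TF_HTTP_ADDRESS}/lock"
TF_HTTP_LOCK_METHOD="POST"
TF_HTTP_UNLOCK_METHOD="DELETE"
export TF_HTTP_ADDRESS TF_HTTP_LOCK_ADDRESS TF_HTTP_UNLOCK_ADDRESS \
  TF_HTTP_LOCK_METHOD TF_HTTP_UNLOCK_METHOD

echo "$TF_HTTP_ADDRESS"
```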

To add the build, deployment and teardown for PRs, the following was added to the Gitlab CI yaml of the open source project:

```yaml
.pr_deployments:
  variables:
    # Configure base domain that the
    # environments will be using
    APP_DOMAIN: gitlab-pr.dockstudios.co.uk
    # State variable, which will isolate the
    # state based on the branch name
    TF_STATE_NAME: $CI_COMMIT_REF_SLUG
    # Populate the terraform pull_request
    # variable that will be passed to the
    # deployment terraform
    TF_VAR_pull_request: $CI_COMMIT_REF_SLUG
    # Variable for docker tag
    TF_VAR_docker_image: "terrareg:v${CI_COMMIT_SHORT_SHA}"
    # Some custom proxies for nomad
    http_proxy: http://some-proxy-for-nomad
    # etc

stages:
  # Interleaved with pre-existing stages
  - build
  - deploy

# Perform docker build of the application
# on the nomad host during 'build' stage
build-pr-image:
  stage: build
  extends: .pr_deployments
  # Use tags to limit to the nomad runner
  tags: [nomad]
  rules:
    # The 'push' pipeline source builds an image for every pushed
    # branch. Using 'merge_request_event' instead would limit this
    # to when a PR is created/updated.
    - if: $CI_PIPELINE_SOURCE == 'push'
  # Use docker without docker-in-docker, as we're
  # passing through the docker socket
  image: docker:latest
  script:
    # Build and tag with the same image name that the deployment
    # Terraform receives via the docker_image variable
    - docker build -f Dockerfile -t "$TF_VAR_docker_image" .

# Deploy the PR to nomad using Terraform
deploy_review:
  stage: deploy
  extends: .pr_deployments
  tags: [nomad]
  needs: [build-pr-image]
  rules:
    - if: $CI_PIPELINE_SOURCE == 'push' && $CI_COMMIT_REF_NAME != $CI_DEFAULT_BRANCH
  # Use the Hashicorp docker image,
  # but override entrypoint, as it defaults
  # to the Terraform binary
  image:
    name: hashicorp/terraform:1.5
    entrypoint: ["/bin/sh", "-c"]
  dependencies: []
  # Configure Gitlab environment for the branch
  environment:
    name: review/$CI_COMMIT_REF_NAME
    # The URL provided to the user in Gitlab
    # to view the environment.
    # Obtained from Terraform output
    url: $DYNAMIC_ENVIRONMENT_URL
    auto_stop_in: 1 week
    # Reference the job that is used to stop the environment
    on_stop: stop_review
  # Pass additional variables to authenticate to nomad.
  variables:
    NOMAD_ADDR: ${NOMAD_ADDR}
    NOMAD_TOKEN: ${NOMAD_TOKEN}
  script:
    # Clone the Terraform repo for project and cd into directory
    - git clone https://gitlab.dockstudios.co.uk/pub/terra/terrareg-nomad-pipeline
    - cd terrareg-nomad-pipeline
    # Install idn2-utils, as the Gitlab script uses this to
    # determine environment variable names for authentication,
    # and jq, which is used below to read the Terraform output
    - apk add idn2-utils jq
    # Perform Terraform plan and apply.
    - ./gitlab-terraform plan
    - ./gitlab-terraform apply
    - echo "DYNAMIC_ENVIRONMENT_URL=https://$(./gitlab-terraform output -json | jq -r '.domain.value')" >> ../deploy.env
  artifacts:
    reports:
      # Use dotenv-style output file as artifact, to
      # read Terraform outputs
      dotenv: deploy.env

# On stop review, this can be run manually,
# or will be executed when the environment is stopped by Gitlab.
stop_review:
  stage: deploy
  extends: .pr_deployments
  tags: [nomad]
  rules:
    - if: $CI_PIPELINE_SOURCE == 'push' && $CI_COMMIT_REF_NAME != $CI_DEFAULT_BRANCH
      when: manual
      # This is important when PRs enable "Pipeline must succeed before merging".
      # Setting allow_failure stops the manual "stop" job from blocking the PR
      # from being merged, since Gitlab will automatically run the stop job
      # when the branch is deleted.
      allow_failure: true
  image:
    name: hashicorp/terraform:1.5
    entrypoint: ["/bin/sh", "-c"]
  variables:
    # Use Git strategy none, as the branch
    # will no longer exist and if the job
    # attempts to check it out, it would fail.
    GIT_STRATEGY: none
    NOMAD_ADDR: ${NOMAD_ADDR}
    NOMAD_TOKEN: ${NOMAD_TOKEN}
  environment:
    name: review/$CI_COMMIT_REF_NAME
    action: stop
  script:
    - git clone https://gitlab.dockstudios.co.uk/pub/terra/terrareg-nomad-pipeline
    - cd terrareg-nomad-pipeline
    - apk add idn2-utils
    # Perform Terraform destroy
    - ./gitlab-terraform destroy
```

There are three new jobs:

- build-pr-image: a Docker build on the nomad server. This uses `tags` to limit the job to the Gitlab runner on the nomad server.
- deploy_review: this clones the Terraform repo and runs Terraform plan/apply.
  - Since Terraform manipulates the domain to ensure it is not too long, the Terraform output is used to populate a dotenv file.
  - The dotenv file is read as an `artifact`, which populates Gitlab variables that are then used for the `environment.url` value.
- stop_review: performs Terraform destroy. This is configured as a `manual` job and is referenced as the `on_stop` job in `deploy_review`.
  - Setting `allow_failure` ensures that PRs do not show the pipeline as running forever (waiting for the manual job to complete).
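The Terraform's exact domain-shortening logic isn't shown in this post, so the following is only a hypothetical shell equivalent. A single DNS label can be at most 63 characters, so `terrareg-<slug>` is cut to 63 characters and any trailing `-` is dropped. The resulting URL is then written in the `KEY=value` format that an `artifacts:reports:dotenv` report is read from. `env_label`, `BASE_DOMAIN` and the sample slug are assumptions for illustration.

```shell
# Hypothetical stand-in for the Terraform's domain shortening: keep the
# "terrareg-<slug>" label within the 63-character DNS label limit.
env_label() {
  printf 'terrareg-%s' "$1" | cut -c1-63 | sed -e 's/-*$//'
}

BASE_DOMAIN="gitlab-pr.dockstudios.co.uk"
# A deliberately long (hypothetical) branch slug.
slug="feature-a-very-long-branch-name-that-would-not-fit-in-one-dns-label-x"

label=$(env_label "$slug")
# Write the URL in dotenv format for the artifacts:reports:dotenv report.
echo "DYNAMIC_ENVIRONMENT_URL=https://${label}.${BASE_DOMAIN}" > deploy.env
cat deploy.env
```

Plain truncation means two long branch names sharing the same prefix would map to the same hostname. Appending a short hash of the full slug would avoid that, if it ever matters.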

Once this is done, the Gitlab variables need populating for:

- NOMAD_ADDR

- NOMAD_TOKEN
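A missing `NOMAD_ADDR` or `NOMAD_TOKEN` tends to surface as a less obvious provider error partway through a plan. A small preflight check at the start of the deploy and stop scripts could fail fast instead. The helper below is hypothetical, not part of the gitlab-terraform script. It uses `eval` for indirect expansion so that it works in POSIX `sh`, which is what the jobs' `/bin/sh -c` entrypoint runs.

```shell
# Fail if any of the named environment variables is unset or empty.
# Hypothetical preflight helper; reports every missing name before failing.
# Names must be plain variable identifiers, since they are passed to eval.
require_vars() {
  missing=0
  for name in "$@"; do
    # Portable indirect expansion (bash would use ${!name}).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "Missing required CI variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Possible use at the top of the deploy_review / stop_review scripts:
# require_vars NOMAD_ADDR NOMAD_TOKEN || exit 1
```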

As this pipeline executes, it runs:

- Build

- Tests

- PR deployment (whilst tests are running)

Since the tests take a long time, and proposed changes should be demonstrable before the tests have been updated, running the PR deployment alongside the tests was ideal.

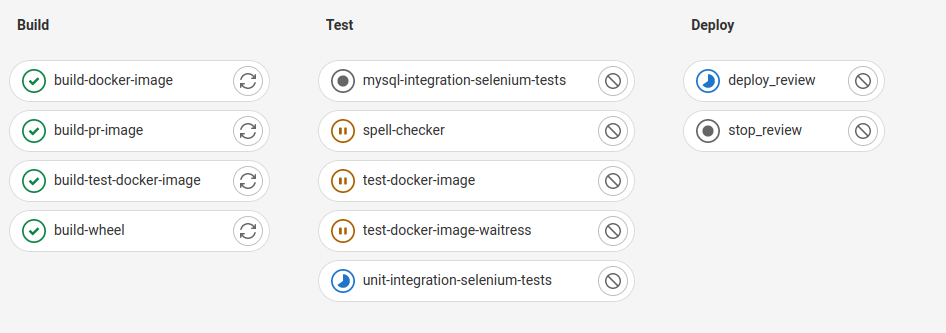

After an initial deployment, we can see the pipeline with the new jobs:

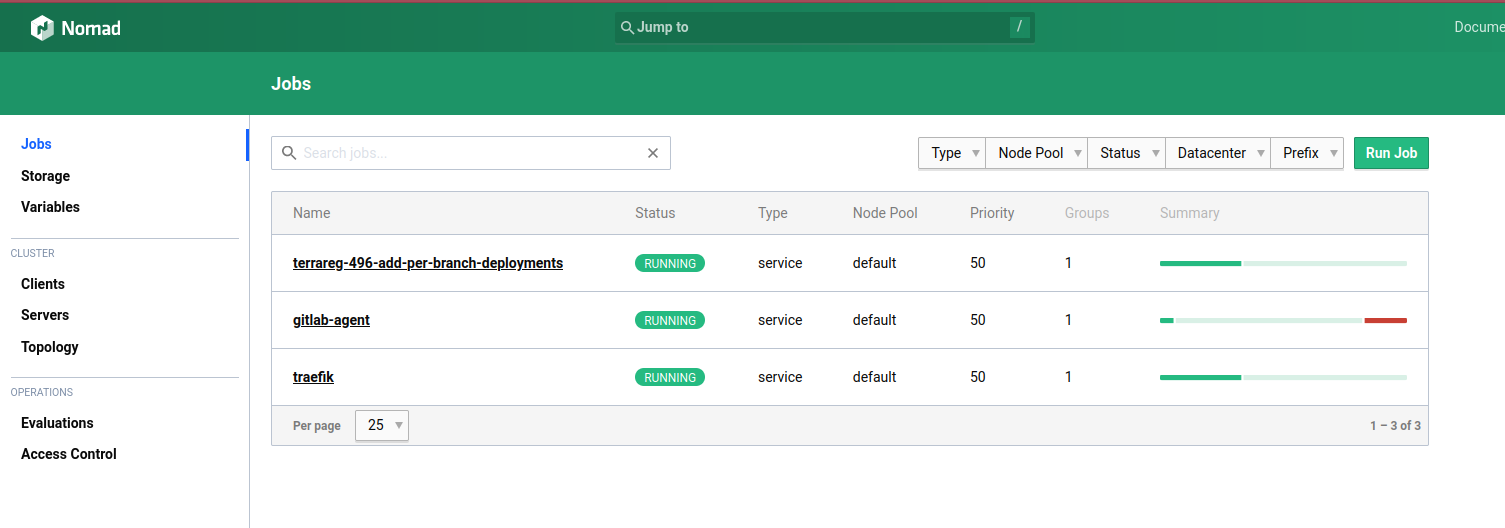

And the successful deployment of the branch in Nomad:

SSL certificates

Whilst this is a little off-topic for the task at hand, getting the SSL certificate assigned was an interesting task:

- Historically, I purchased SSL certificates, as everyone did, including wildcard certs

- Now, I use letsencrypt for all certificates.

I hadn’t attempted to obtain a wildcard SSL certificate from letsencrypt.

Since I use bind for public DNS, I found an interesting article that outlined a good setup: https://blog.svedr.in/posts/letsencrypt-dns-verification-using-a-local-bind-instance/

The setup was quite interesting:

- Create a new zone that covered the ACME validation subdomain

- Allow dynamic updates using a secret

- Use a certbot plugin that can publish updates to bind whilst requesting the SSL certificate

I initially attempted to create a zone for the whole gitlab-pr subdomain. However, during the verification process, I received errors that an SOA record for the _acme-challenge subdomain could not be found - forcing another new zone to be created for this.
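For a wildcard certificate such as `*.gitlab-pr.dockstudios.co.uk`, the ACME DNS-01 challenge is a TXT record at `_acme-challenge.gitlab-pr.dockstudios.co.uk`, and that name ended up needing a zone of its own. The sketch below only prints the `nsupdate` commands that a dynamic update to bind would consist of. In practice the certbot plugin performs this update itself using the TSIG secret. The function name, server name, TTL and token are hypothetical.

```shell
# Print nsupdate commands that publish an ACME DNS-01 challenge token.
# Sketch only: piping the output into `nsupdate -k <tsig-keyfile>` would apply it.
acme_nsupdate_commands() {
  server=$1   # authoritative bind server that accepts dynamic updates
  domain=$2   # domain covered by the (wildcard) certificate
  token=$3    # validation token supplied by the ACME client
  record="_acme-challenge.${domain}."
  printf 'server %s\n' "$server"
  # The update targets the dedicated _acme-challenge zone.
  printf 'zone %s\n' "$record"
  printf 'update add %s 60 IN TXT "%s"\n' "$record" "$token"
  printf 'send\n'
}

acme_nsupdate_commands ns.example.com gitlab-pr.dockstudios.co.uk example-token
```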

Importing test data

With the new environments being automatically created, I wanted to automatically load in some test data into the environment, giving a better out-of-the-box experience.

I implemented a small batch job, alongside the main application job:

```hcl
resource "nomad_job" "terrareg_data_import" {
  jobspec = <<EOHCL
job "terrareg-${var.pull_request}-data-import" {
  type = "batch"

  group "import-data" {
    count = 1

    task "import-data-task" {
      template {
        data = <<EOH
{{ range nomadService "terrareg-${var.pull_request}" }}
base_url="http://{{ .Address }}:{{ .Port }}"

# Wait for API to be ready (plain list, as /bin/sh has no {1..10} brace expansion)
for itx in 1 2 3 4 5 6 7 8 9 10
do
  curl -s -o /dev/null "$base_url" && break
  sleep 5
done

# POSIX function syntax, as the script is run by /bin/sh
make_request() {
  endpoint=$1
  data=$2
  curl "$base_url$endpoint" \
    -XPOST \
    -H 'Content-Type: application/json' \
    -H 'X-Terrareg-ApiKey: GitlabPRTest' \
    -d "$data"
}

# Create namespace
make_request "/v1/terrareg/namespaces" '{"name": "demo"}'

# Create module
make_request "/v1/terrareg/modules/demo/rds/aws/create" '{"git_provider_id": 1, "git_tag_format": "v{version}", "git_path": "/"}'

# Import version
make_request "/v1/terrareg/modules/demo/rds/aws/import" '{"version": "6.4.0"}'
{{ end }}
EOH
        destination = "local/setup.sh"
        perms       = "755"
      }

      driver = "docker"

      config {
        image      = "quay.io/curl/curl:latest"
        entrypoint = ["/bin/sh"]
        command    = "/local/setup.sh"
      }

      env {
        # Force job re-creation
        FORCE = "${uuid()}"
      }

      resources {
        cpu        = 32
        memory     = 32
        memory_max = 128
      }
    }
  }
}
EOHCL

  purge_on_destroy = true
  detach           = false
  rerun_if_dead    = true

  depends_on = [nomad_job.terrareg]
}
```

Since the configuration is all performed via API calls, I used the curl docker image, overriding its entrypoint to run a shell script that is dynamically generated using a template. The template allows the injection of the IP/port of the main container.

The uuid() environment variable changes on every Terraform apply, which forces the job to be re-created on each deployment. Since a batch job only runs once after it is created, the data import runs once per deployment, which suits this application perfectly.
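The readiness loop in the import script carries on after its final attempt even if the API never came up, and the imports then fail with less obvious curl errors. A hypothetical variant that stops with a non-zero exit status, so the failure shows up on the Nomad task, could look like the following. `retry`, its arguments and the commented usage line are assumptions. It is written in POSIX `sh` to match the `/bin/sh` entrypoint used with the curl image.

```shell
# Run a command until it succeeds, up to a maximum number of attempts.
# Returns 0 on the first success, or 1 once every attempt has failed.
retry() {
  attempts=$1   # maximum number of attempts
  delay=$2      # seconds to sleep between failed attempts
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # No point sleeping after the last attempt.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  echo "Command failed after $attempts attempts: $*" >&2
  return 1
}

# Possible use in setup.sh, with base_url rendered by the Nomad template:
# retry 10 5 curl -s -o /dev/null "$base_url" || exit 1
```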

Summary

For me, the combination of these technologies has been really useful:

Nomad is very easy to set up and can easily be used in an isolated environment. Deploying to nomad is also incredibly easy, making a change like this simple to implement.

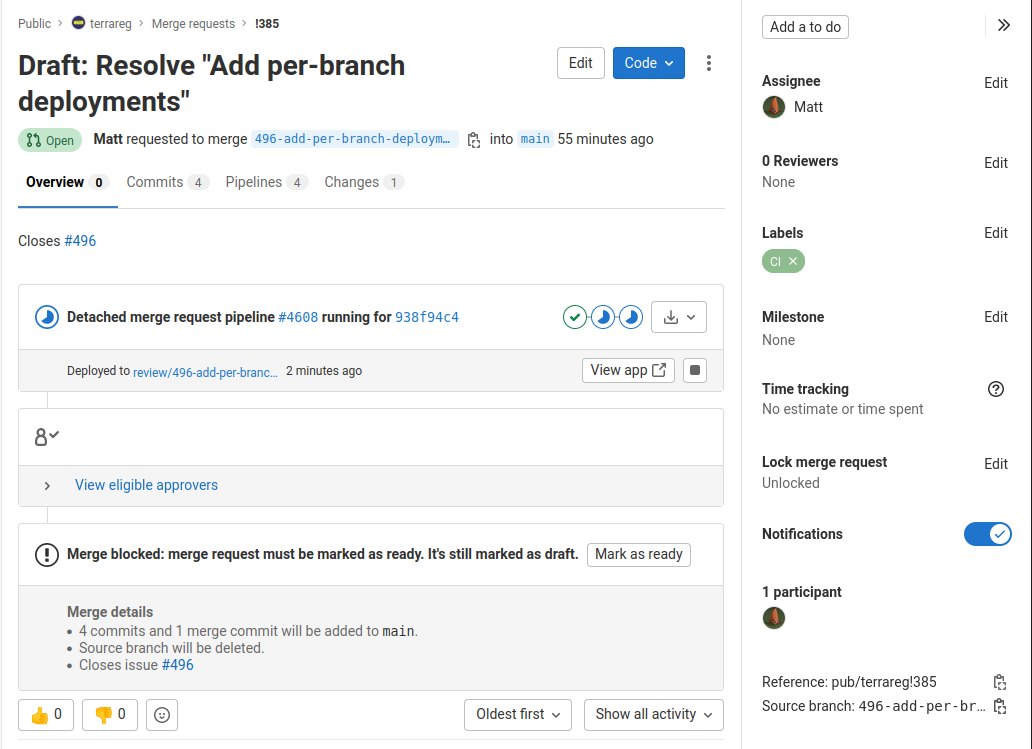

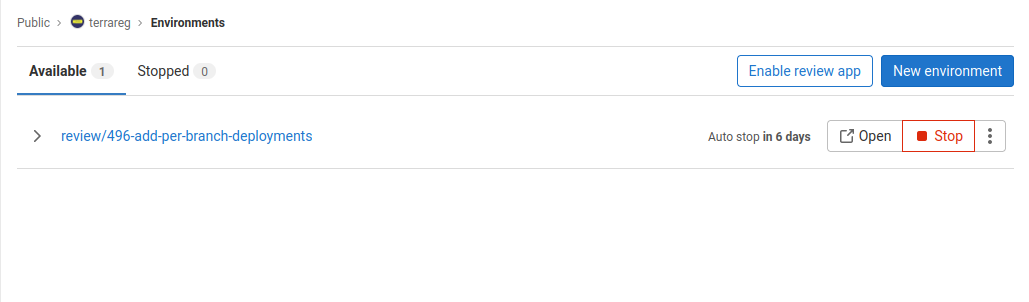

Gitlab’s integration with review environments is nice and attaches itself to pull requests:

The functionality for dynamic environment generation and automated teardown is incredibly useful - not something you’d find in many deployment tools.

Overall, this change took around half a day to get working end-to-end and will provide a great benefit for this project - and should also be easy to adopt with other projects.

To see the complete example, see:

- The changes to the open source application: https://gitlab.dockstudios.co.uk/pub/terrareg/-/merge_requests/385

- The Terraform code for the release: https://gitlab.dockstudios.co.uk/pub/terra/terrareg-nomad-pipeline